Trends

Building GPU-based systems is costly. GPU manufacturers do not permit the use of cheap consumer cards in data centers, and specialized data center hardware is more expensive and consumes a significant amount of electricity.

Cloud computing can be a solution, but vGPU-based systems are still less popular than on-premises ones. In 2018, NetApp surveyed hundreds of IT professionals from companies that use graphics processors in their work. About 60% of respondents said that they buy their own equipment. Only 23% chose the cloud option.

The reason for this gap is the long-standing view that virtualization reduces device performance. In fact, in modern systems a vGPU is almost as fast as bare metal (the difference is only 3%). This is possible thanks to new hardware and software solutions from the developers of graphics cards and data center equipment.

Software platforms

In February of this year, VMware announced an update for the ESXi hypervisor that speeds up virtual GPUs through two changes. First, ESXi added DirectPath I/O technology, which lets the CUDA driver communicate with the GPU directly, bypassing the hypervisor, and thus transfer data faster. Second, vMotion was disabled in ESXi. With a GPU this procedure is not needed, since the VM always works with a single accelerator. This change reduced the overhead of data transfer.

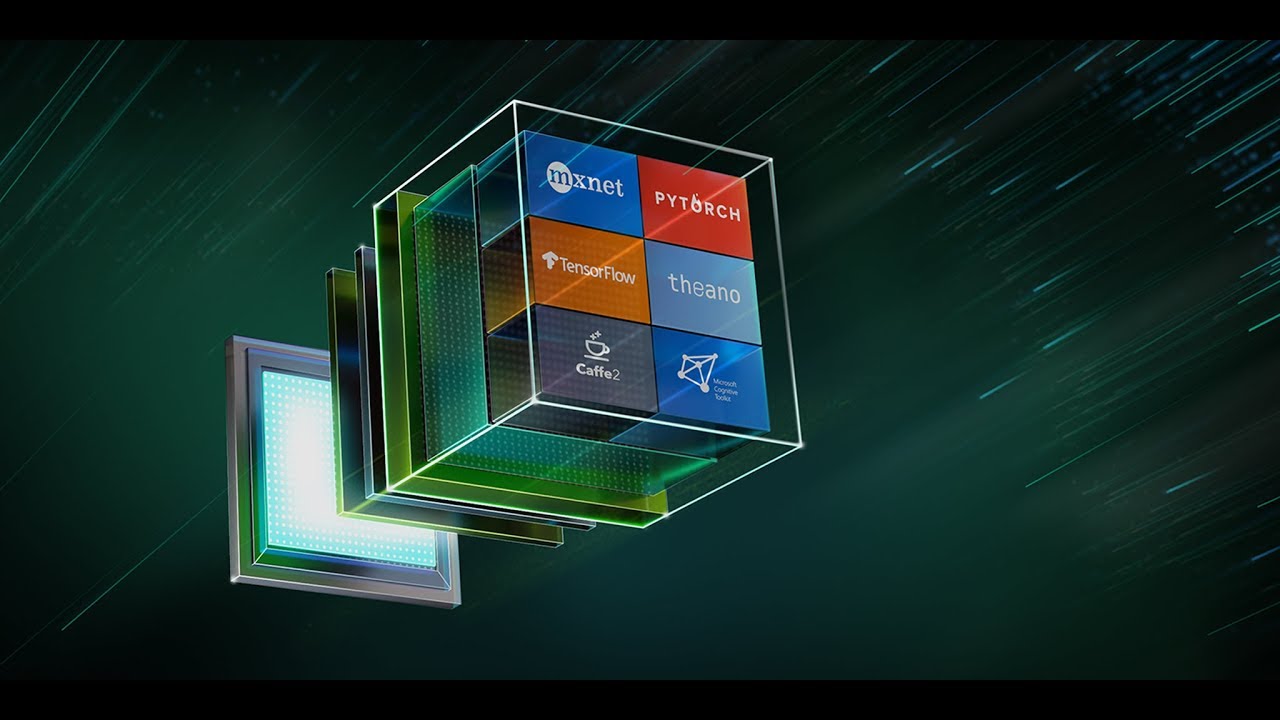

Nvidia released another platform: an open source solution called Rapids. It combines several libraries built on the CUDA architecture. These libraries help prepare data for training neural networks and automate work with Python code. Together, this functionality simplifies working with machine learning algorithms.

AMD is developing a similar platform called ROCm. It combines many libraries for high-performance computing and is based on a special C++ dialect called HIP, which simplifies running mathematical operations on the GPU, such as working with sparse matrices, fast Fourier transforms, and random number generators. The platform also includes SR-IOV (single-root input/output virtualization) technology. It shares a PCIe bus between virtual machines, which speeds up data transfer between CPUs and GPUs in the cloud.

Open source solutions for accelerating GPU computing, such as ROCm and Rapids, let data center operators use computational resources more efficiently and get more performance from the available hardware. Speaking of hardware …

Hardware platforms

GPU developers are releasing hardware that improves the performance of vGPU clusters. For example, last year Nvidia introduced a new data center graphics processor, the Tesla T4, based on the Turing architecture. Compared to previous-generation GPUs, T4 performance for 32-bit floating-point operations increased from 5.5 to 8.1 teraflops (p. 60 of the document).
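To put the two throughput figures in perspective, the relative gain can be computed directly. The snippet below is a minimal sketch; the function name `relative_gain` is illustrative and does not come from any Nvidia tooling.

```python
def relative_gain(old: float, new: float) -> float:
    """Return the fractional increase from old to new (0.5 means +50%)."""
    if old <= 0:
        raise ValueError("old must be positive")
    return (new - old) / old


# FP32 throughput figures quoted in the text, in teraflops.
PREVIOUS_GEN_TFLOPS = 5.5
T4_TFLOPS = 8.1

gain = relative_gain(PREVIOUS_GEN_TFLOPS, T4_TFLOPS)
print(f"FP32 throughput gain: {gain:.0%}")
```

With the quoted numbers this works out to a gain of roughly 47%.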

The new architecture increased accelerator performance by separating integer and floating-point operations, which now execute on separate cores. The developer also combined the shared memory and the L1 cache into a single module, which increased both the bandwidth and the capacity of the cache. T4 cards are already used by large cloud providers.
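A toy model shows why separate integer and floating-point cores can help: when both kinds of instructions share one pipeline they are issued one after another, while separate units let the two streams overlap. The sketch below assumes one instruction per cycle and perfect overlap with no dependencies, and the instruction mix (100 floating-point, 36 integer) is a hypothetical example rather than a figure from the text.

```python
def cycles_shared(fp_ops: int, int_ops: int) -> int:
    """One pipeline: integer and floating-point instructions issue in turn."""
    return fp_ops + int_ops


def cycles_separate(fp_ops: int, int_ops: int) -> int:
    """Separate units: streams overlap, so the longer one sets the time.

    Assumes ideal overlap with no dependencies between the two streams.
    """
    return max(fp_ops, int_ops)


# Hypothetical instruction mix, chosen only for illustration.
FP_OPS, INT_OPS = 100, 36

shared = cycles_shared(FP_OPS, INT_OPS)
separate = cycles_separate(FP_OPS, INT_OPS)
print(f"shared: {shared} cycles, separate: {separate} cycles, "
      f"speedup: {shared / separate:.2f}x")
```

Real hardware will fall short of this ideal, since the two instruction streams usually depend on each other, but the model captures where the gain comes from.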

Outlook

In the near future, experts expect demand for cloud-based graphics accelerators to grow. This will encourage the development of hybrid technologies that combine a GPU and a CPU in a single device. In such integrated solutions, the two types of cores share a common cache, which speeds up data transfer between graphics and general-purpose processors. Special load balancers are already being developed for these chips, and they increase vGPU performance in the cloud by 95% (slide 16 of the presentation).
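The balancer from the presentation is not described here, so the following is only a generic sketch of the underlying idea: split a batch of work between CPU and GPU cores in proportion to their throughput, so that both finish at about the same time. The function name `split_work` and the throughput numbers are hypothetical.

```python
from typing import Tuple


def split_work(total_items: int, cpu_rate: float, gpu_rate: float) -> Tuple[int, int]:
    """Split items between CPU and GPU proportionally to their throughput.

    Rates are in items per second. Returns (cpu_items, gpu_items).
    """
    if cpu_rate <= 0 or gpu_rate <= 0:
        raise ValueError("rates must be positive")
    gpu_items = round(total_items * gpu_rate / (cpu_rate + gpu_rate))
    return total_items - gpu_items, gpu_items


# Hypothetical throughputs: the GPU cores process items 4x faster.
CPU_RATE, GPU_RATE = 50.0, 200.0

cpu_items, gpu_items = split_work(1000, CPU_RATE, GPU_RATE)
cpu_time = cpu_items / CPU_RATE
gpu_time = gpu_items / GPU_RATE
print(f"CPU: {cpu_items} items in {cpu_time:.1f} s, "
      f"GPU: {gpu_items} items in {gpu_time:.1f} s")
```

A static split like this only works when throughput is known in advance; a production balancer would more likely measure it at run time and adjust the shares.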

But some analysts believe that virtual GPUs will be displaced by a new technology: optical chips, in which data is encoded by photons. Machine learning algorithms already run on such devices. For example, an optical chip from the startup LightOn completed a transfer learning task several times faster than an ordinary GPU: in 3.5 minutes instead of 20.

Most likely, large cloud providers will be the first to adopt the new technology. Optical chips are expected to accelerate the training of recurrent neural networks with the LSTM architecture and of feedforward neural networks. The makers of optical chips hope that such devices will go on sale in a year. A number of major providers are already planning to test the new type of processor in the cloud.

What is the result?

It is hoped that such solutions will make cloud-based high-performance computing more popular and increase the number of companies that process big data in the cloud.