Virtual assistants sold us a world where we would interact with machines by voice alone, in a natural way. The reality has been different. Early on, many assistants lost their appeal because of comprehension failures and errors in handling requests, so they were relegated to simple helpers that set alarms or give us the weather forecast. Now, with the arrival of artificial intelligence, this could change.

Whether it is Siri, Google Assistant, Cortana or Alexa, they all offer a form of voice interaction that, in theory, helps us with basic tasks and promises to handle some more complex ones. Bixby is Samsung’s bet in this complicated market, where manufacturers seek to win and dominate more and more sectors, such as the home in the case of Amazon Echo, Google Home and Apple HomePod. With the recently announced Bixby 2.0, the time has come to analyze and learn a little about the Korean company’s strategy in a market that, they say, is about to explode.

Bixby 1.0: Better to talk than touch

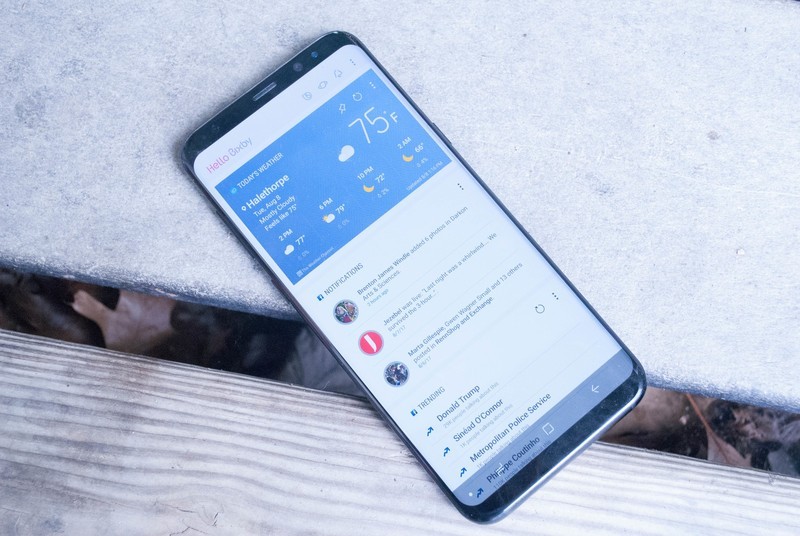

Bixby is just a baby. It was announced alongside the Galaxy S8 in March 2017, and from the start its approach was what drew attention, since it did not seek to be another Siri or Assistant but to go further. Samsung’s main argument has always been that Bixby is powered by artificial intelligence and aims to offer a new type of interaction: a voice interface that complements touch, so that anything we could do by touching the screen, we could also request by speaking. It is an evolution of the S Voice we first met on the Galaxy S III in 2012.

Image Source: Google Image

Here we must mention that the newly acquired Viv, founded by the creators of Siri, did not have time to participate in this first version of Bixby. Even so, the approach earned it good reviews, especially for the possibility of doing everything through conversation rather than pre-established commands, that is, contextual conversations with cognitive tolerance, which means the assistant would be able to “learn” from us and act according to what we were looking for. A very clear example: we could ask it to show us pictures of our brother and send them to our mother, and Bixby would know who we were referring to without further detail.

Bixby consists of three parts that work together under the same core …

- Bixby Voice: The voice-activated interface, which works basically the same way as other artificial intelligence solutions such as Google Assistant or Amazon Alexa: it listens to our voice, interprets the information and returns an action. This is where Samsung seeks to differentiate itself through so-called ‘contextual awareness’, although it still depends on an internet connection and content stored in the cloud.

- Bixby Vision: The option integrated into the camera, which works in a similar way to Google Goggles. We can identify objects just by pointing the smartphone camera at them, and it offers options depending on what has been recognized.

- Bixby Home: This is integrated into the home screen of our smartphone and is designed for those who do not use the voice service, either by preference or because it is not supported in their country. It is a kind of personal feed where we can integrate applications to access their information in one place. This has been the least attractive part of Bixby: since it can be easily disabled, many users have decided to remove it from their screens, although it keeps appearing every time the dedicated Bixby button is pressed.

On top of this, within a few months of its release Samsung announced an update that allowed the dedicated Bixby button to be deactivated, a move that some saw as a defeat given the Korean company’s inability to break into the virtual assistant market. This is something Samsung now seeks to reverse, or at least to calm the waters, with the announcement of Bixby 2.0.

Bixby 2.0: The omnipresent assistant


Samsung says this second version of Bixby will bring new elements such as ubiquity, a personal approach and openness, with which the assistant will be able to expand its reach beyond the smartphone, improve its ability to “learn” and open up to developers thanks to a new SDK. It sounds interesting on paper. The bad news is that we did not have a chance to try it, and there was not even a demo at the last Samsung Developers Conference, so we will have to wait until some time in 2018 to verify that all this is true.

Perhaps one of the most attractive points of Bixby 2.0 is the participation of Viv, which is beginning to take the reins of development to integrate some of the features we saw in 2016 in the impressive prototype of its AI-driven virtual assistant. That assistant was able to follow conversations, act “intelligently” according to our location and needs, and learn from our actions. Sound familiar? Yes, these are all the benefits Samsung sold us with Bixby.

Another key point is that Bixby 2.0 debuts something Samsung has dubbed ‘Bixby Brain’, with which, according to the company, the assistant will be able to support more devices, more languages and more services. Broadly speaking, Samsung seems to be pursuing a global assistant that is not limited to smartphones or to the speakers that are so fashionable nowadays, but extends to any connected device.


The challenges and objectives of Bixby

Taking advantage of our visit to San Francisco for the Samsung Developers Conference (SDC 2017), we spoke with some of the people responsible for intelligence and Bixby within the company, with the aim of understanding what they are doing to win the growing battle in the market for personal, virtual and now “smart” assistants.


Brad Park, vice president of mobile communication, along with some members of Samsung’s strategic intelligence division, gave us a brief overview of the challenges and objectives they have for Bixby 2.0, which will be released sometime during 2018.

“Bixby is not a personal assistant but an intelligent service platform, a new ecosystem that seeks to meet communication and interaction needs across connected devices. While most assistants are only designed to carry out tasks, Bixby is able to ‘think and learn’, so in the end we will not be facing a single Bixby but several, since each one will adapt to the needs of its user within their context.”

One of the novelties of Bixby is that Samsung says it does not want a closed platform that competes with other options, but an open ecosystem that is ready to collaborate with other brands and can be present on other devices.

“With Bixby we do not want to compete but to strengthen ties and create alliances. That is why we are working with Google and Facebook, as well as opening up to developers with the new SDK, since the goal is to learn and collaborate, to create something together that can meet the needs of the user.”

It should be noted that Bixby is the first attempt by Samsung to bring together its most recent acquisitions: Viv for artificial intelligence, SmartThings for IoT and Harman for the connected car, along with, of course, the Galaxy smartphones and its range of connected devices.


“We want Bixby to be present on as many devices as possible, from televisions, speakers, refrigerators and washing machines to connected cars with the help of Harman. The challenge will be to change the paradigm of how interfaces are used. In the car, for example, we have it easier because users know they cannot be interacting with a screen. A TV or a refrigerator is different: there is no such interface, and now we want people to talk to them. It will not be easy, but that is our bet.”

“Another challenge is that today TV users do not usually sign in and do not take advantage of all the services the device offers. Our idea is that in the future Bixby can identify the user by voice, and knowing who is speaking is essential when we are talking about a device like the television, which is used by several members of the family. This is the real challenge for ‘intelligent’ assistants: identifying who is speaking to them and acting according to that person’s needs with minimal intervention from the user.”

“Bixby is still growing and we want it to be the nerve center of the connected ecosystem, so it will offer personalization options where, for example, we can program commands that execute a series of previously selected actions. This will help the assistant get to know us better and know what to recommend when we ask it to take us to a restaurant, because Bixby would know what kind of food is our favorite.”

When we asked about security and the architecture that powers Bixby and guarantees its operation, they told us they could not go into the subject. They only mentioned that the information is stored in the cloud, which in theory means Bixby does not depend on powerful hardware, and that, according to the executive, there is a high level of security behind it.

So far everything sounds very good and looks interesting. Unfortunately, at SDC 2017 we did not have the opportunity to test the new features Samsung promises for Bixby 2.0, so we will have to keep an eye out for its launch sometime in 2018 to analyze it thoroughly.